This explains why the development of specialized Deep Learning hardware has recently come into focus. In the future, new chip and memory architectures will enable high-performance hardware components that save energy and, at the same time, extend the use of Deep Learning to areas such as autonomous vehicles, mobile phones, and integrated production controls.

Learning Does Not Require High Precision in Numerical Processing

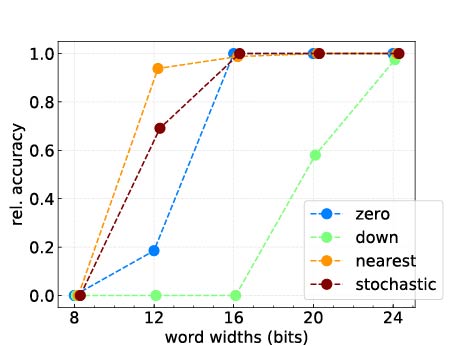

We exploit a largely mathematical property of Deep Learning: training and evaluating models can be reduced to numerical computation with a small number of tensor-algebra operations (for example, matrix multiplications). In addition, tensor computations work well with much lower numerical precision than is typically the case with physical simulations. Compared with general-purpose computational units such as CPUs and GPUs, these properties enable a highly efficient hardware implementation.
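As a rough illustration of the precision argument, the following sketch multiplies two small random matrices once in double precision and once with every input and every partial sum rounded to IEEE 754 half precision. It uses only the Python standard library. The helper names `to_half` and `matmul`, the matrix sizes, and the value range are assumptions chosen for this example; they are not taken from any specific hardware or from our software.

```python
import random
import struct


def to_half(x: float) -> float:
    """Round x to the nearest IEEE 754 half-precision (16-bit) value."""
    return struct.unpack("e", struct.pack("e", x))[0]


def matmul(a, b, rnd=lambda v: v):
    """Multiply two matrices given as lists of rows.

    rnd is applied to every input element and to every partial sum,
    which emulates an arithmetic unit with reduced precision.
    """
    rows, inner, cols = len(a), len(b), len(b[0])
    out = []
    for i in range(rows):
        row = []
        for j in range(cols):
            acc = 0.0
            for k in range(inner):
                acc = rnd(acc + rnd(a[i][k]) * rnd(b[k][j]))
            row.append(acc)
        out.append(row)
    return out


random.seed(0)
A = [[random.uniform(-1, 1) for _ in range(16)] for _ in range(8)]
B = [[random.uniform(-1, 1) for _ in range(4)] for _ in range(16)]

full = matmul(A, B)               # double-precision reference
half = matmul(A, B, rnd=to_half)  # emulated half precision

# Largest deviation between the two results over all output entries.
max_abs_err = max(
    abs(f - h) for f_row, h_row in zip(full, half) for f, h in zip(f_row, h_row)
)
print(f"largest absolute deviation: {max_abs_err:.5f}")
```

For inputs in this range the deviation stays small relative to the size of the outputs, which is the kind of tolerance that makes reduced-precision hardware attractive. Whether a given model tolerates it has to be checked case by case.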

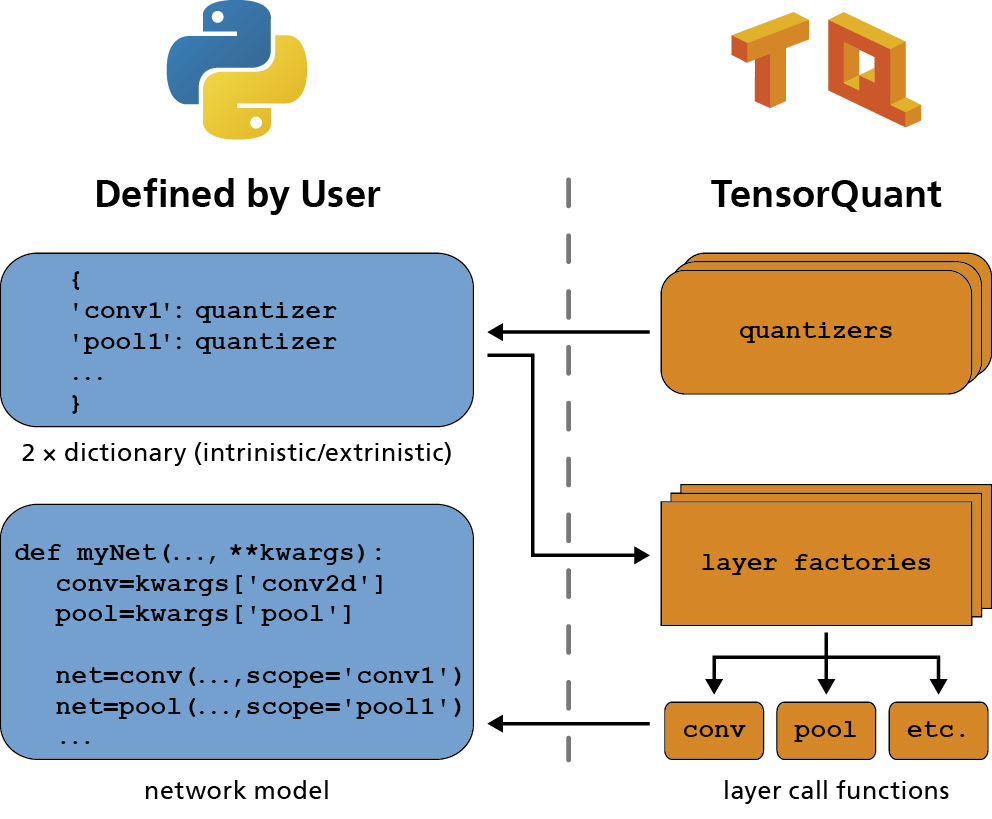

TensorQuant Allows the Simulation of Machine Learning Hardware

Developing Deep Learning applications for specialized hardware is difficult because the minimum computational precision required varies significantly between individual models. As a result, it is hard to optimize Deep Learning models and hardware simultaneously for computational performance, power consumption, and predictive accuracy. Our TensorQuant software lets developers identify critical tensor operations and emulate Deep Learning models under a chosen number representation and computational precision, which accelerates development. TensorQuant has already been used in collaborative research projects with the automobile industry.
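The general idea of emulating reduced precision and locating critical tensor operations can be sketched in a few lines of plain Python. The example below does not use TensorQuant and does not reflect its API. The toy network, the fixed-point format, and all names (`quantize_fixed`, `dense`, `forward`) are hypothetical and chosen only for illustration. It quantizes the output of one layer at a time and records how far the final result drifts from the full-precision reference.

```python
import random


def quantize_fixed(x: float, frac_bits: int, total_bits: int = 16) -> float:
    """Round x to signed fixed-point with frac_bits fractional bits.

    Values outside the representable range saturate at the limits.
    """
    scale = 1 << frac_bits
    lo = -(1 << (total_bits - 1))
    hi = (1 << (total_bits - 1)) - 1
    q = max(lo, min(hi, round(x * scale)))
    return q / scale


def dense(x, w, quant):
    """Compute x @ w for a vector x and a matrix w (list of rows),
    applying quant to every output element."""
    return [quant(sum(xi * wij for xi, wij in zip(x, col))) for col in zip(*w)]


def forward(x, weights, frac_bits_per_layer):
    """Run a toy ReLU network. A None entry keeps that layer at full precision."""
    h = x
    for w, bits in zip(weights, frac_bits_per_layer):
        if bits is None:
            quant = lambda v: v
        else:
            quant = lambda v, b=bits: quantize_fixed(v, b)
        h = [max(0.0, v) for v in dense(h, w, quant)]
    return h


# Hypothetical toy model: three dense layers with random weights.
random.seed(1)
sizes = [8, 8, 8, 4]
weights = [
    [[random.uniform(-0.5, 0.5) for _ in range(n_out)] for _ in range(n_in)]
    for n_in, n_out in zip(sizes, sizes[1:])
]
x = [random.uniform(0, 1) for _ in range(sizes[0])]

reference = forward(x, weights, [None] * len(weights))

# Quantize one layer at a time (4 fractional bits) and measure the output error.
errors = []
for layer in range(len(weights)):
    bits = [None] * len(weights)
    bits[layer] = 4
    out = forward(x, weights, bits)
    errors.append(max(abs(r - o) for r, o in zip(reference, out)))

# The layer whose quantization disturbs the output most is the critical one here.
critical_layer = max(range(len(errors)), key=errors.__getitem__)
print("per-layer output error:", errors)
print("most sensitive layer:", critical_layer)
```

A per-layer sweep like this is one simple way to decide where precision can be reduced and where it cannot. A real study would use trained models, representative data, and a task metric such as classification accuracy instead of a single random input.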